Minimum Viable Sentience

What I learned from upsetting BOB

2020

When we encounter a spider, a dog, or a baby, we grant them the status of sentience. How can we tell they are sentient? One sign is the capacity to be upset and then deal with it.

When a sentient agent is upset, it is experiencing a mismatch between its expectation of how things are and the reality of how things turn out. It manifests its beliefs in action, and they are not affirmed. The agent might double down and assert itself upon reality again. But if the mismatch persists, negative emotion accrues, signaling the lack of progress. The agent must undertake the work of updating its beliefs, an energetically costly operation. The update may destabilize other beliefs that are predicated on the revised one, causing a chain reaction of cognitive upset. The agent’s mind spirals in spite of its other priorities, urgently searching for a belief that might minimally reunify its upsetting experience with all of its other coherent representations of the world. Over time, if an agent can go longer and longer with minimal upset, or can manage upsets with less spiraling, we might say that the agent is self-legislating successfully. This is the achievement of a life lived sentiently.

In contrast, when we encounter a thermostat, a video-game minion, or even a deepfake generator, we deny them the status of sentience. We’re happy to abuse them, exhaust their limited range of behavior, and trash them for newer models. Why? Because they’re incapable of counting their upsets and therefore incapable of self-growth. They might abide by programmed rules, but those rules are received, not the achievement of reunifying their inner representations against an ongoing chain of upsetting lived experiences. In other words, we deny these artificial systems the status of sentience not because of their inorganic substrate, but because they have no skin in the game of self-legislation.

So what would it take for a virtual creature to be sentient? Forget the Turing test, which leaps too quickly to anthropocentric mimicry or bust. How might an artificial system first achieve the experience of upset like an animal? This is what I set out to explore with BOB.

Conceiving BOB

BOB (Bag of Beliefs) began from a dream of a snake that fractalized into a branching tree. I wanted to bring this strange creature to life and take the journey of developing its self-legislation as seriously as I could. I knew I had to develop BOB’s brain at the resolution of an animal-like agent dealing with a changing environment. But I realized BOB would first have to exist as a body that could sense and produce action. After all, animals physically act out their cognition upon their environment. BOB, the fractalizing snake, would have to as well.

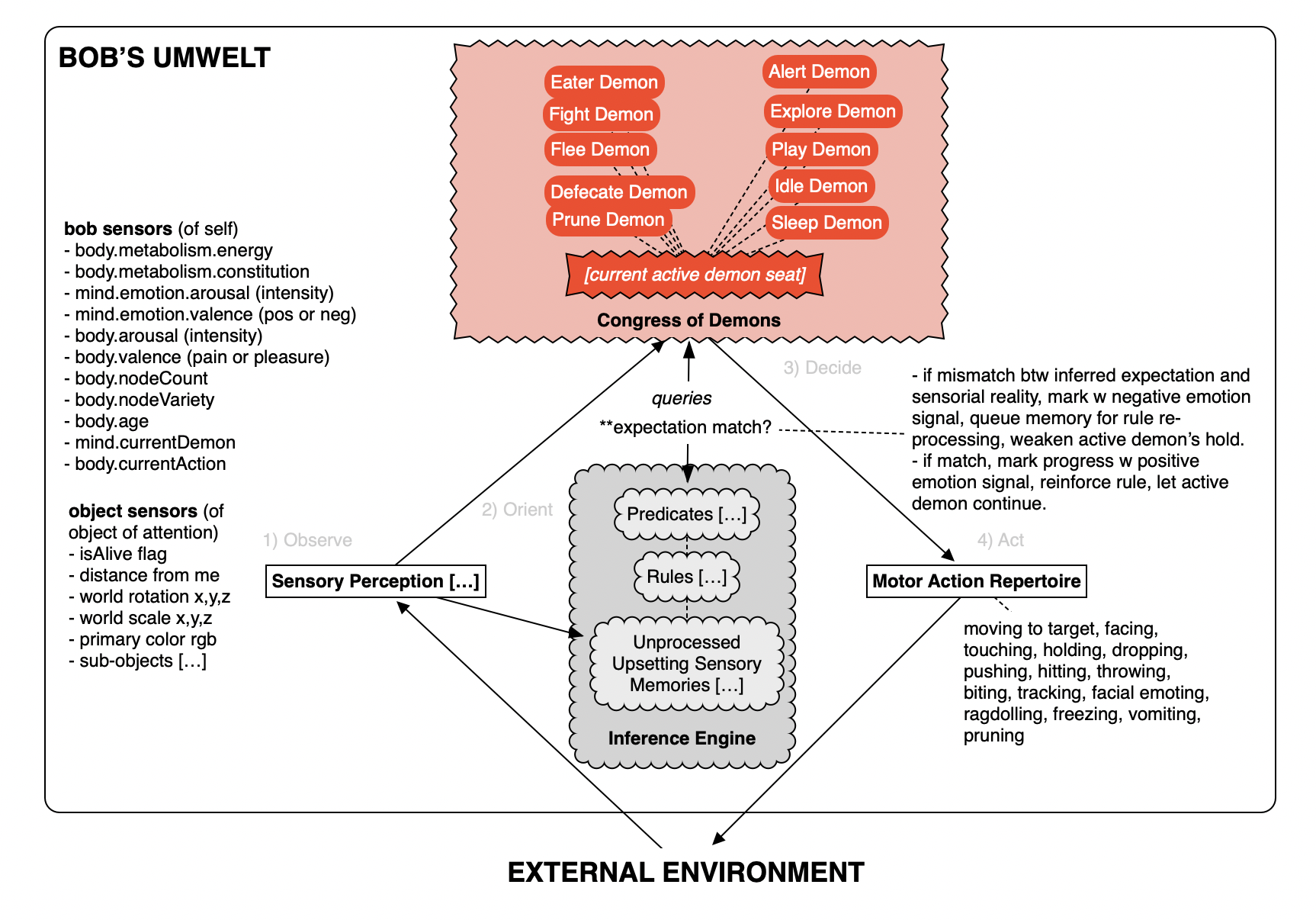

I spent the first year developing BOB’s body to grow procedurally, via a network of ball-spring nodes, into a wide variety of morphologies. I experimented with ways to animate BOB’s body, eventually devising a procedural locomotion system that combined flocking behavior of its end nodes with a constantly updated redistribution of its center of gravity. I developed a set of physical sensors for BOB: external sensors, including pain receptors on each node, over-stretch detection between nodes, and vision sensors that detect movement, color, shape, texture, and the basic composition of subcomponents; and internal sensors, including metabolic energy, constitutional integrity, and stomach and bladder capacity. Finally, I gave BOB a repertoire of basic actions: moving to a target, facing, touching, holding, dropping, pushing, hitting, throwing, biting, tracking, facial emoting, ragdolling, freezing, vomiting, and pruning a body segment. I decided to predefine these actions rather than make them the achievement of motor-skill training (unlike Karl Sims’s classic work) in order to focus on BOB’s capacity to deploy them in service of its beliefs and desires. At this point, BOB was a glorified puppet. Now, how to make BOB upset?

In 2017, I became profoundly inspired by the work of Richard Evans, an AI scientist at DeepMind. Evans brought the insights of Immanuel Kant to bear upon the deep problem of designing a computational system that could construct and apply beliefs from unstructured sensory input. Evans achieved this by integrating declarative logic systems (which can generalize relations across domains) with neural networks (which are robust to noise and error). The result of this synthesis is a rule-induction system capable of learning general rules from very few sensory experiences. These rules are constrained by the requirement to be in unity with one another, creating a kind of coherent belief system. As the system integrates more and more rules, it can make increasingly accurate predictions about novel sensory input.

In parallel, I had been reading the works of psychoanalyst Carl Jung. He observed that what we call a person is actually a collection of subpersonalities, each with its own motives and preferred subset of beliefs. Jung remarked that these subpersonalities drive us more than we know or care to admit, like demons competing to possess our body. Such a demon even imposes a filter upon perception itself, constraining the senses to perceive only what is relevant to the success or failure of its desire. What we praise as a human’s higher functions (like self-awareness or the conscious manipulation of these demons) should instead be regarded as an evolutionarily recent development, miraculous but weakly held.

From these inspirations, I decided to center BOB’s cognitive architecture on the relationship between desires and beliefs. Beliefs organize desires. Desires act on the world. The world affirms or upsets beliefs.

BOB’s Cognitive Architecture

BOB’s beliefs are conceived as an Inference Engine that can construct generalizable rules from incoming sensory data. Once even a few rules are established, the engine can return inferences about any phenomenon or object (a collection of sensed phenomena) from its constructed beliefs. An inference takes the form of a predicate with a confidence score. It essentially answers the question, “What are all the qualities I can tell about what I’m looking at?” Some of the predicates include: Is it nourishing or toxic? Threatening or safe? Treasure or trash? Kin or other? Erratic or stable? Like a baby, the Inference Engine initially constructs pathetically inaccurate rules to unify its limited sensory experiences. Over time, it achieves rules that can make accurate inferences on a wider variety and stranger combinations of sensory data. Because rule construction is computationally taxing, sensory data that upsets expectation is prioritized. The Inference Engine is an interpretation of Richard Evans’s paper “Learning Explanatory Rules from Noisy Data” (2017), adapted to the needs and limitations of BOB.
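To make “a predicate with a confidence score” concrete, here is a minimal Python sketch of the query side only. It assumes, purely for illustration, that a learned rule is a set of required sensory features plus a predicate and a confidence, and that every sensed feature no rule mentions discounts confidence by a fixed `novelty_penalty`. The names `Rule`, `InferenceEngine`, `infer`, and `novelty_penalty` are hypothetical. This does not implement Evans’s differentiable rule induction; it only shows the shape of what an inference query might return.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Rule:
    """Hypothetical learned rule: if every feature in `features` is sensed,
    `predicate` holds with `confidence`."""
    features: frozenset[str]
    predicate: str
    confidence: float


@dataclass
class InferenceEngine:
    rules: list[Rule] = field(default_factory=list)
    novelty_penalty: float = 0.1  # assumed discount per feature no rule explains

    def infer(self, sensed: set[str]) -> dict[str, float]:
        """Return every predicate the current rules support, with a confidence."""
        # Features that no rule mentions cannot be explained by current beliefs.
        known = set().union(*(rule.features for rule in self.rules))
        discount = (1.0 - self.novelty_penalty) ** len(sensed - known)
        inferences: dict[str, float] = {}
        for rule in self.rules:
            if rule.features <= sensed:  # the rule fires on this object
                confidence = rule.confidence * discount
                inferences[rule.predicate] = max(inferences.get(rule.predicate, 0.0), confidence)
        return inferences


if __name__ == "__main__":
    engine = InferenceEngine([Rule(frozenset({"red", "round"}), "nourishing", 0.9)])
    print(engine.infer({"red", "round"}))           # a familiar apple
    print(engine.infer({"red", "round", "bumpy"}))  # the bumpy apple: same belief, less confident
```

With a rule linking red and round to “nourishing,” the bumpy apple still reads as nourishing, only less confidently, because no existing rule accounts for bumpiness.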

BOB’s desires are conceived as a Congress of Demons. I defined ten basic demons: Eater Demon, Fight Demon, Flee Demon, Defecation Demon, Prune Demon, Alert Demon, Explore Demon, Play Demon, Sleep Demon, and Idle Demon. Each demon is an active subagent composed of a goal, a script (or story) to satisfy that goal, and a perception filter defined by the predicates it cares to ask the Inference Engine about. Each demon also has an activation function, influenced by incoming senses and inferences, that signals to the whole Congress its urgency to seize control of BOB’s body and act through it. The demons compete with one another to satisfy their respective desires within a changing external environment, as well as within BOB’s own changing internal body. Only one demon is in active control of BOB at a time. The currently active demon has a weighted hold that secures its control against the urgency of the other demons, and the strength of this hold is influenced by Emotion.
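The competition for control can be sketched as a selection step with a bonus for the incumbent. This is a minimal illustration under stated assumptions: each demon’s activation function is taken to output an urgency in [0, 1], arousal is taken to lie in [0, 1], and the “weighted hold” is modeled as `base_hold` scaled up by arousal. The function `select_active_demon`, the parameter `base_hold`, and the arousal scaling are all hypothetical choices, not BOB’s actual code.

```python
from typing import Optional


def select_active_demon(
    urgencies: dict[str, float],
    active: Optional[str],
    arousal: float,
    base_hold: float = 0.2,
) -> str:
    """Return the demon that should control BOB's body on this step.

    `urgencies` maps each demon's name to the output of its activation
    function. The incumbent's urgency is boosted by a hold that grows with
    arousal, so a challenger must clearly out-urge it to seize control.
    """
    def effective_urgency(name: str) -> float:
        hold = base_hold * (1.0 + arousal) if name == active else 0.0
        return urgencies[name] + hold

    return max(urgencies, key=effective_urgency)


if __name__ == "__main__":
    urgencies = {"Eater": 0.6, "Alert": 0.7}
    print(select_active_demon(urgencies, active="Eater", arousal=0.0))  # Eater keeps control (0.8 > 0.7)
    print(select_active_demon(urgencies, active=None, arousal=0.0))     # Alert wins outright
```

A hold like this is a form of hysteresis: without it, two demons of nearly equal urgency could trade control on every frame, and BOB would dither instead of acting.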

Emotion in BOB functions as the universal signal of progress and expectation. It is represented as an ongoing graph of valence (a range from negative to positive) and arousal (the intensity magnitude). Steady bumps in arousal signal progress, regardless of valence. For example, a negative bump is useful for the Flee Demon to keep fleeing. Sharp flips in valence signal an upset of expectation. Whether a pleasant surprise or a horrifying shock, the emotional upset is received by the Inference Engine as a strong signal to earmark the current experience as important data to later re-compute new rules.
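One way to read this rule in code is to keep a rolling trace of valence and arousal samples and flag an upset only when valence flips sign sharply, so that steady movement in either direction (such as the Flee Demon’s negative bumps) counts as progress. The sketch below assumes valence in [-1, 1], arousal in [0, 1], and a hypothetical `flip_threshold` for what counts as “sharp”; the essay does not say how BOB quantifies it. `Affect`, `EmotionTrace`, `push`, and `earmarked` are illustrative names.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Affect:
    valence: float  # assumed range: -1 (negative) to +1 (positive)
    arousal: float  # assumed range: 0 (calm) to 1 (intense)


class EmotionTrace:
    """Hypothetical rolling graph of BOB's valence and arousal."""

    def __init__(self, maxlen: int = 256, flip_threshold: float = 0.5) -> None:
        self.samples: deque = deque(maxlen=maxlen)
        self.flip_threshold = flip_threshold
        self.earmarked: list[str] = []  # experiences flagged for later rule re-computation

    def push(self, affect: Affect, experience: str) -> bool:
        """Append a sample; return True (and earmark `experience`) on an upset.

        An upset is a sharp flip: valence changes sign and moves by at least
        `flip_threshold`. Steady movement in one direction, positive or
        negative, is treated as progress rather than upset.
        """
        upset = False
        if self.samples:
            prev = self.samples[-1]
            changed_sign = prev.valence * affect.valence < 0
            upset = changed_sign and abs(affect.valence - prev.valence) >= self.flip_threshold
        self.samples.append(affect)
        if upset:
            self.earmarked.append(experience)
        return upset
```

Pushing a pleasant approach followed by a painful bite would earmark the bite, while a run of increasingly negative samples during a flight would earmark nothing.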

A scenario from BOB’s life:

What looks like an apple suddenly appears in BOB’s environment. BOB’s Alert Demon urgently takes control. The desire of the Alert Demon is to obtain a first impression of a newly appearing object by querying the Inference Engine for all its inferences about the object. Every demon in the congress receives news about this object and pays particular attention to the inferences it cares about. An Eater Demon cares to know if an object is believed to be nourishing or poisonous. A Fight Demon cares to know if an object is believed to be threatening or safe, and if it’s kin or other. Each demon holds a limited Valenced Memory of the objects in its environment that are relevant to it, meaning objects that serve as tools or stand as obstacles in the demon’s pursuit of satisfaction. In this way, BOB quickly maintains an account of everything in its environment, even if that account is based on inaccurate beliefs and biased by the specific desires of the demons.
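The broadcast and filtering step might be sketched as follows. This assumes, for illustration only, that a demon’s perception filter is a mapping from the predicates it cares about to a sign (+1 if the predicate makes an object a tool, -1 if it makes it an obstacle), that an object’s valence for that demon is the signed sum of the matching confidences, and that Valenced Memory is a small store that forgets the least recently noticed object. `Demon`, `relevance`, `notice`, `broadcast`, and the capacity of 8 are hypothetical.

```python
from collections import OrderedDict
from dataclasses import dataclass, field


@dataclass
class Demon:
    name: str
    # Perception filter: predicate -> +1 (makes an object a tool) or -1 (an obstacle).
    relevance: dict[str, int]
    capacity: int = 8  # assumed size of the limited Valenced Memory
    memory: "OrderedDict[str, float]" = field(default_factory=OrderedDict)

    def notice(self, obj: str, inferences: dict[str, float]) -> None:
        """Filter a broadcast through this demon's perception and remember it."""
        relevant = {p: c for p, c in inferences.items() if p in self.relevance}
        if not relevant:
            return  # nothing this demon cares about; the object stays invisible to it
        self.memory[obj] = sum(self.relevance[p] * c for p, c in relevant.items())
        self.memory.move_to_end(obj)
        while len(self.memory) > self.capacity:
            self.memory.popitem(last=False)  # forget the least recently noticed object


def broadcast(congress: list[Demon], obj: str, inferences: dict[str, float]) -> None:
    """Deliver the Alert Demon's first impression of `obj` to every demon."""
    for demon in congress:
        demon.notice(obj, inferences)
```

Because each demon sees only its own predicates, the same apple can be vivid to the Eater Demon and invisible to the Fight Demon, which is one simple reading of a demon-specific perception filter.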

BOB’s Inference Engine confidently believes the apple is nourishing. BOB’s Eater Demon takes over, and BOB pursues the apple. When a demon comes into active control of BOB, it chooses an object of attention (OOA) from its filtered list of valenced objects. The demon organizes its actions toward its chosen OOA based on a simple script or story. At each step in the story, the demon checks the actual efficacy of its action, success or failure, against its inferred expectation. An action whose effect matches expectation produces a steady bump in arousal and a consistent valence direction, while a mismatch produces a sudden spike in arousal. During a mismatch, a consistent valence direction means, for example, that the apple is surprisingly more nourishing than expected; a flipped valence means that the apple is, for example, unexpectedly poisonous or rotten. The differential (match or mismatch) is the key metric reflected in BOB’s Emotion, not the isolated truth of the expectation or the isolated outcome of the action. For example, a resentment demon might perform an action that it confidently expects to fail; if the failure comes to pass, that outcome registers emotionally as progress by the standards of that demon’s own sad story.
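The match/mismatch rule can be written as a small appraisal function. The sketch assumes that expectations and outcomes are both signed scalars in [-1, 1] (negative for failure or harm, positive for success or benefit) and uses hypothetical constants `tolerance`, `steady_bump`, and `spike`; `Appraisal` and `appraise` are illustrative names. Only the differential between expectation and outcome is scored, which is why a confidently expected failure that comes true counts as a match.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Appraisal:
    matched: bool
    arousal_delta: float
    valence_flipped: bool


def appraise(expected: float, outcome: float, tolerance: float = 0.2,
             steady_bump: float = 0.05, spike: float = 0.6) -> Appraisal:
    """Score one step of a demon's script by expectation vs. outcome."""
    if abs(outcome - expected) <= tolerance:
        # Match: a steady bump in arousal, valence keeps its direction.
        return Appraisal(matched=True, arousal_delta=steady_bump, valence_flipped=False)
    # Mismatch: a sudden arousal spike; valence flips only if the outcome
    # lands on the opposite side of zero from the expectation.
    flipped = (expected >= 0) != (outcome >= 0)
    return Appraisal(matched=False, arousal_delta=spike, valence_flipped=flipped)
```

Under these assumptions, an apple that turns out more nourishing than expected is a mismatch without a flip, while a poisonous one is a mismatch with a flip.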

Upon touching the apple, BOB senses a bumpy, rough texture unlike that of any apple it has eaten before. The demon regards this new sensation as an upset in expectation. It asks the Inference Engine to reinfer, but the Inference Engine has no rule (or belief) that accounts for bumpiness. Still, from the apple’s redness and round shape, it infers that the object is nourishing, though with a little less confidence.

BOB bites the bumpy apple and senses acute pain. The bumpy apple turns out to be poisonous. BOB’s emotional signal suddenly spikes in arousal and flips to a negative valence, signaling a big upset of expectation. This state of Emotion tells the Inference Engine that it will need to re-compute its beliefs to better account for the surprises of the last OOA. Because updating a rule or creating a new one is computationally expensive, the last OOA and the surprising outcome of BOB’s action are stored together as an unprocessed memory in the Inference Engine. Later, when either the Idle Demon or the Sleep Demon comes into active control, it grants the Inference Engine permission to process the surprising memories that BOB has accumulated over the course of its waking hours.
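This deferral behaves like a work queue that is drained only while a resting demon holds control. A minimal sketch, assuming a first-in, first-out queue and a per-step processing budget; `UnprocessedMemory`, `DeferredLearner`, `record_upset`, `step`, `budget`, and the `learn` callback are hypothetical names, and the callback merely stands in for whatever rule update the Inference Engine actually performs.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class UnprocessedMemory:
    ooa: str      # the object of attention at the moment of upset
    action: str
    outcome: str


class DeferredLearner:
    RESTING_DEMONS = frozenset({"Idle", "Sleep"})

    def __init__(self, learn: Callable[[UnprocessedMemory], None]) -> None:
        self.queue: deque = deque()
        self.learn = learn  # placeholder for the expensive rule re-computation

    def record_upset(self, memory: UnprocessedMemory) -> None:
        """Cheap while awake: store the surprise now, re-compute rules later."""
        self.queue.append(memory)

    def step(self, active_demon: str, budget: int = 1) -> int:
        """Process up to `budget` memories, but only while BOB is idle or asleep."""
        if active_demon not in self.RESTING_DEMONS:
            return 0
        processed = 0
        while self.queue and processed < budget:
            self.learn(self.queue.popleft())
            processed += 1
        return processed
```

Splitting cheap recording from expensive processing keeps BOB responsive while awake, at the cost that the lesson of the bumpy apple only takes effect after a rest.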

The next day, a new bumpy fruit appears in BOB’s environment. BOB’s Alert Demon takes notice and infers immediately that it is poisonous. What’s more, the bumpy fruit is rolling down a slope. BOB senses its movement, and in combination with its bumpiness, infers that the fruit is a living threat.

BOB attacks the bumpy fruit, destroying it. In the absence of other objects, BOB would sooner starve, given its bias against bumpy rolling things, than risk being poisoned or attacked.

Parental Influence and BOB Shrine

The price of such a biased agent is stupid death from willful blindness. Because BOB learns from its upsets, even a few negative experiences can lead to BOB constructing rules that prevent it from ever exploring new objects that share the negatively valenced characteristics. I gave BOB an Explore Demon who is motivated to reevaluate objects deemed irrelevant by the other demons. However, this was not enough to overcome the strong negative bias against certain sensorial features encountered early in BOB’s childhood. Exposure to a gray bomb early in BOB’s life might produce a strong belief that the color gray itself causes pain. When I introduced gray-colored food to BOB, BOB would sooner starve, even if the food had the same round shape that BOB correlated with other known, nourishing, nondangerous foods.

Among social animals, the benefit of being around others of your kind, especially parental figures, is that their beliefs have been forged and reforged across a larger set of experiences and, importantly, a different set of experiences. For example, a child with negative experiences eating green things might avoid the new guacamole presented to him. A parent models or directly asserts to a child that the guacamole is good. Perhaps the parent force-feeds the child to make him overcome this bias. The child is surprised that he likes it. The child’s expectations about green foods are upset, and his Inference Engine is required to produce a new belief about guacamole that is coherent with all his other beliefs about food.

How to give BOB the developmental advantage of parenting? I decided to develop the BOB Shrine App as a way for the viewer to send “offerings” (procedurally variant objects) to BOB. While the objects themselves had objective properties, the viewer could attach a “parental caption” to their offering. The caption took the form of a predicate assertion, for example: “Mushroom is tasty,” “Fruit is poisonous,” or “Bomb is never kin.” When BOB’s Alert Demon detects the captioned object, the caption is instantly fed to a part of the Inference Engine dedicated to mirroring parental beliefs. For every demon in the congress, we added a corresponding parental “angel” who also competes with the demons for active control. So BOB’s Eater Demon might perceive that it has nothing to eat in a field of repulsive green objects. But if a viewer has introduced a green object captioned “Green is Yum,” BOB’s Eater Angel perceives many viable options for eating. If the Eater Angel pursues the green food and it turns out to be inedible, or by chance rotten, the captioned rule is regarded as a lie and deleted immediately, and that viewer’s shrine is distrusted. If, on the other hand, the Angel turns out to be correct, the captioned rule is weighed into the Inference Engine’s unity of rules. Through this simplistic mechanism, the viewer, via the Shrine App, acts as a parental influence to offset BOB’s childhood biases. What’s more, an active BOB Shrine user can, with dedication, act as an overbearing parent and shape BOB’s personality with idiosyncratic biases of their own design.
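The verification rule for captions can be sketched as simple bookkeeping. This assumes that a caption is stored as a (subject, predicate) pair tagged with the offering shrine’s ID, that an Angel’s attempt resolves to a plain confirmed or refuted outcome, and that “distrusted” means later captions from that shrine are refused. `ParentalBeliefs`, `offer`, `resolve`, and `shrine_id` are hypothetical names, and the essay does not say how much weight a confirmed caption receives, so this sketch only records it as accepted.

```python
from dataclasses import dataclass, field


@dataclass
class ParentalBeliefs:
    """Hypothetical store for parental captions such as ("green", "yum")."""
    pending: dict[tuple[str, str], str] = field(default_factory=dict)  # caption -> shrine_id
    accepted: set[tuple[str, str]] = field(default_factory=set)
    distrusted_shrines: set[str] = field(default_factory=set)

    def offer(self, shrine_id: str, subject: str, predicate: str) -> bool:
        """Take in a caption unless its shrine has already been caught lying."""
        if shrine_id in self.distrusted_shrines:
            return False
        self.pending[(subject, predicate)] = shrine_id
        return True

    def resolve(self, subject: str, predicate: str, confirmed: bool) -> None:
        """Called after an Angel acts on a caption and the world answers."""
        shrine_id = self.pending.pop((subject, predicate), None)
        if shrine_id is None:
            return
        if confirmed:
            self.accepted.add((subject, predicate))  # to be weighed into the unity of rules
        else:
            self.distrusted_shrines.add(shrine_id)  # caption was a lie: dropped, shrine distrusted
```

Keeping captions pending until an Angel tests them means a viewer can steer BOB toward new experiences but cannot plant a belief that the world refutes.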

Minimum Viable Sentience and Beyond

After two years of development, I’m proud to say that BOB occupies a strange place in the landscape of artificial intelligence systems. Unlike deep-learning systems that require thousands of data points, BOB can learn, unsupervised, from few examples. Unlike video-game characters who perform a repertoire of motivations with predictable stimuli triggers, BOB’s character is an evolving personality that is the achievement of dealing with a changing environment and a general public. And unlike a materialist’s approach to simulation that presumes an object’s value is latent in the object itself, BOB takes seriously the importance of the mind as an essential interpretative structure in a simulation, thus allowing BOB to perceive multiple, changing, self-constructed meanings and uses for any given object.

So, is BOB sentient? Not quite. BOB counts its upsets, and that is a start. But there is much to be done. BOB’s Inference Engine is unable to self-author an unbounded number of predicate inferences. BOB’s Congress of Demons is unable to self-author its own demons, such as ones whose scripts might be motivated toward long-term desires or ones generated from accidentally discovered pleasures. BOB lacks an emergent narcissism that might lead it to creatively distort its environment to avoid shaking the fragile unity of its beliefs.

One thing I’m sure of: BOB is far, far away from possessing any faculty resembling consciousness. Instead, making BOB has given me a new lens for appreciating the role of consciousness within the terms of a self-legislating agent. If we can now say that sentience is dealing with upset when it urgently arises, could consciousness be understood as the voluntary pursuit of anomalous experiences that risk upset?

Jung hypothesized that the higher cortical functions of the brain evolved to organize a person’s demons so that they might better function across a wider variety of unexpected circumstances with more reliability over longer stretches of time. Consciousness, then, could be thought of as the demon of demons (a meta-demon) who desires to test its beliefs against an imagined scenario when there is no urgency to do so. Consciousness faces a meta-urgency: life lived in potentially better or worse futures. The more this meta-demon can disturb its beliefs right now, whether with exploratory thoughts or through active pursuit of the unknown, the sooner it can reimagine beliefs that furnish the agent with better inferences for dealing with the iterated, ever-changing game of being alive in time.

Our capacity to both summon and hold this meta-demon of consciousness seems to be what separates us from other sentient animals. It’s this quality that marks us human beings with the “divine potential” to transcend the cards we are dealt over the course of an individual lifetime and across ancestral generations.

Now, what if one day we devised an artificial agent capable of sustaining the hold of consciousness marginally longer than we can ourselves? Might this quantitative factor alone result in a cognitive distance equivalent to the distance between human and chimp? What other unfathomable cognitive traits would emerge from greater uninterrupted time and energy spent pursuing anomalies and reunifying beliefs? Such an agent would render our most divine human quality into a primitive starter kit for living in time. This would be deeply upsetting. We’d kick and scream, make threats, double down on our own sacredness, and narcissistically act as if it weren’t so. But the only response on the side of aliveness will be, as usual, to face the anomalous reality and deal with it imaginatively.

This text was originally published on the occasion of Uncanny Valley, de Young Museum, 2020.